VR Toolkit

Software and hardware developed and used by the group

Equipment available

On the hardware side, we are focusing on VR headsets, which offer excellent immersion at a reasonable cost. We currently have the following equipment available:

- HTC Vive (x2)

- HTC Vive Pro (x2, one with wireless adapter)

- Oculus Quest

On the software side, we develop our prototypes with the Unity 3D game engine. Rather than extending existing astronomy software to support advanced displays, we have chosen to implement scientific visualization within software that already handles technical aspects such as stereoscopy and user tracking.

Prototypes produced

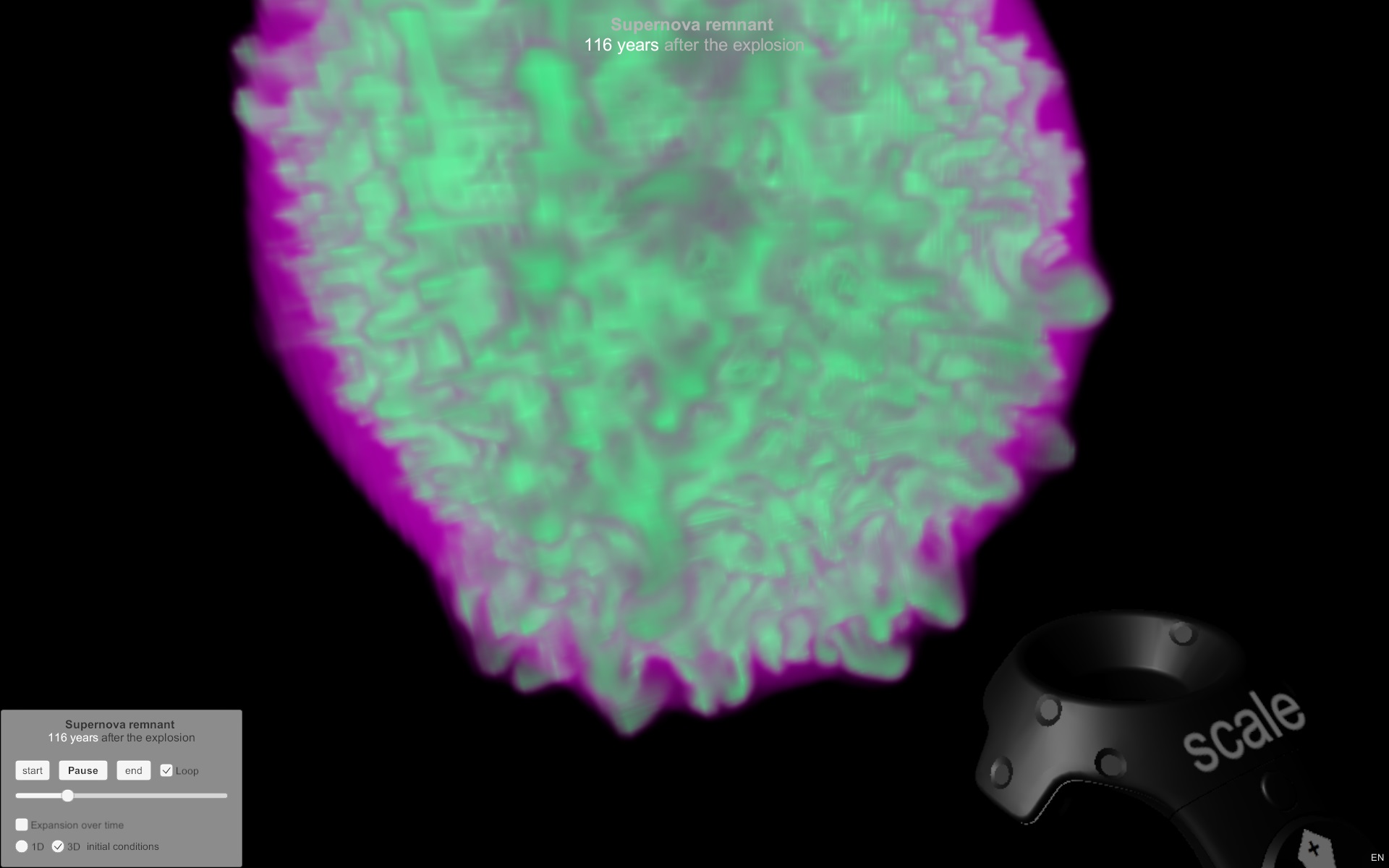

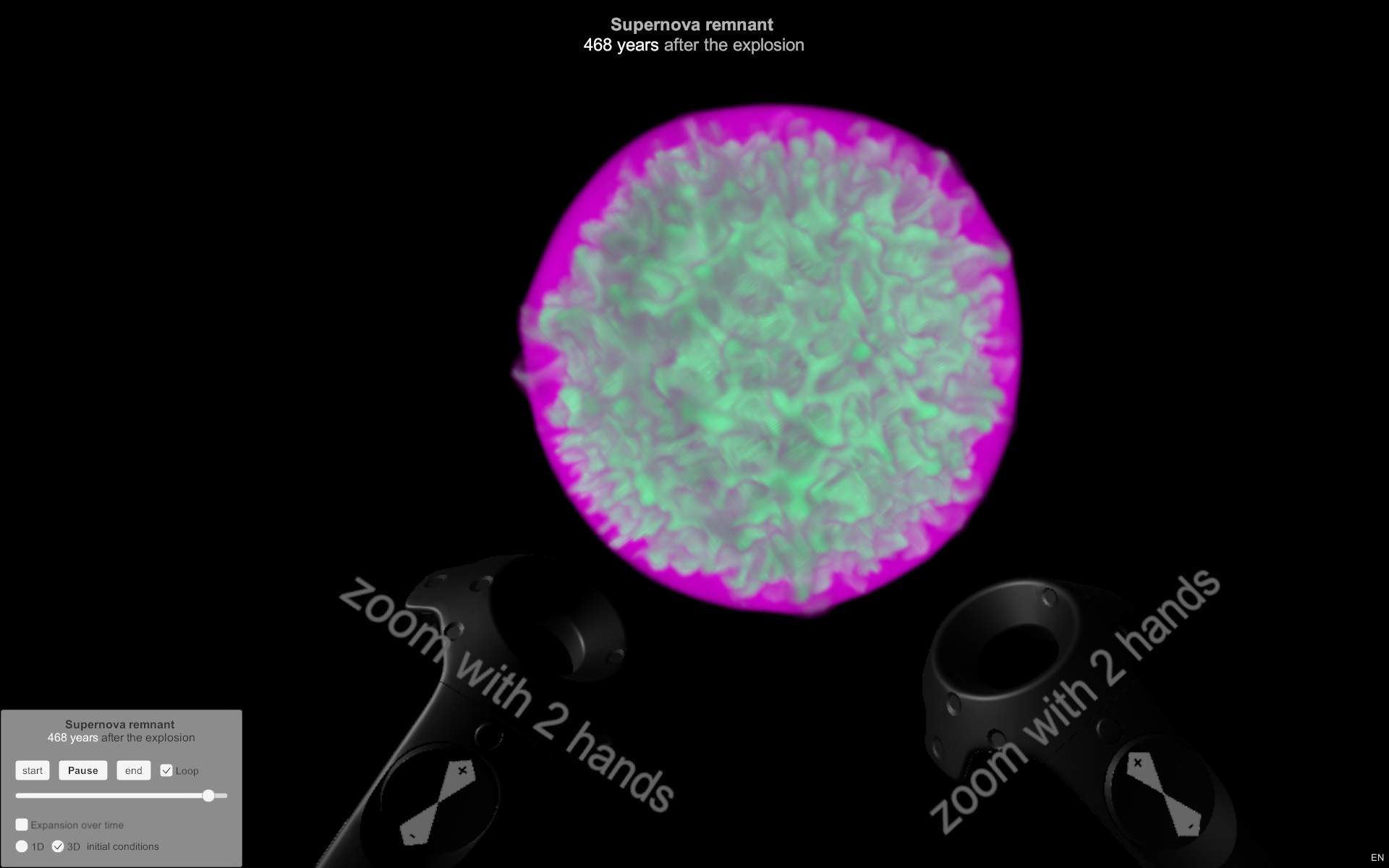

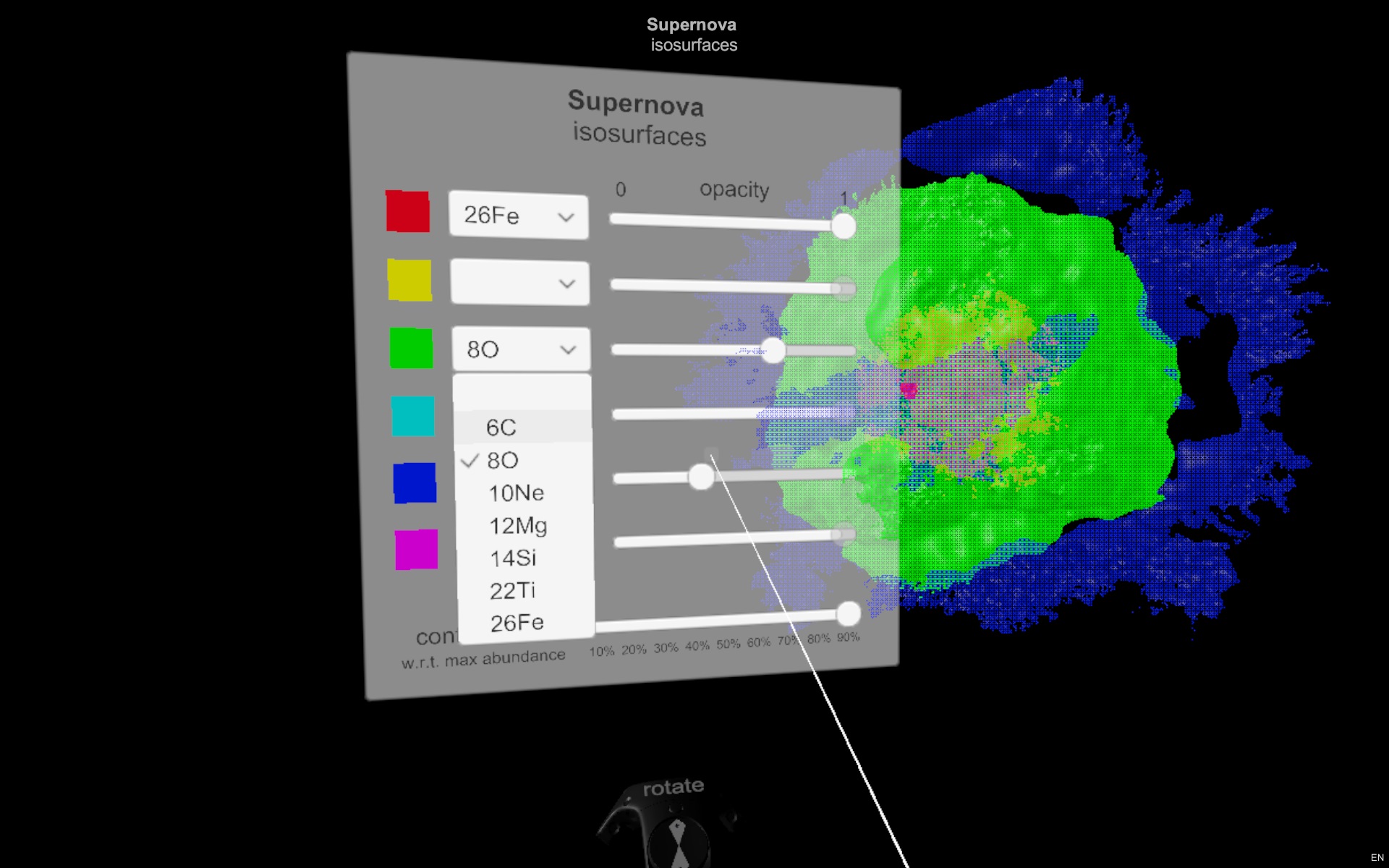

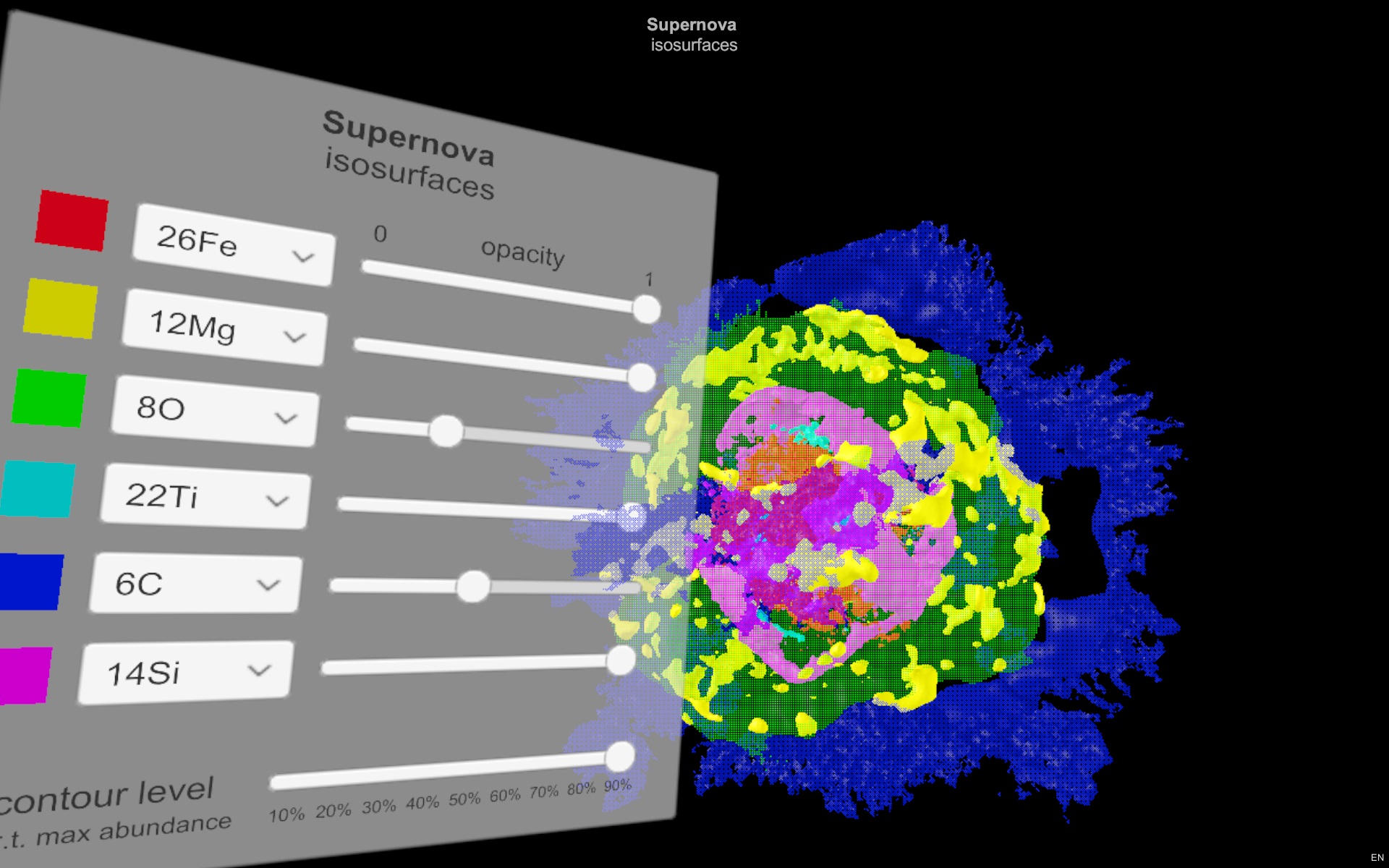

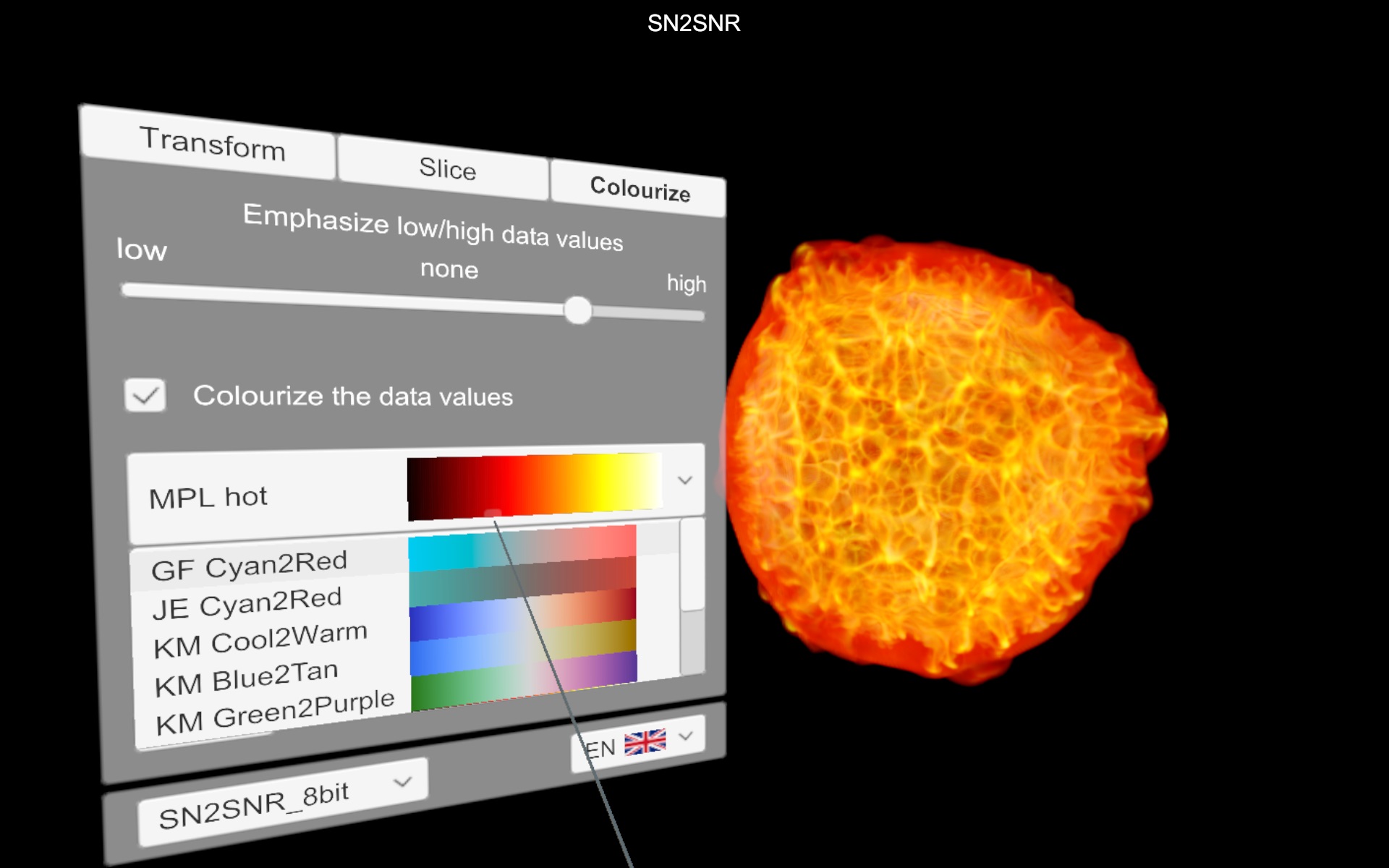

SN2SNR

Cube2

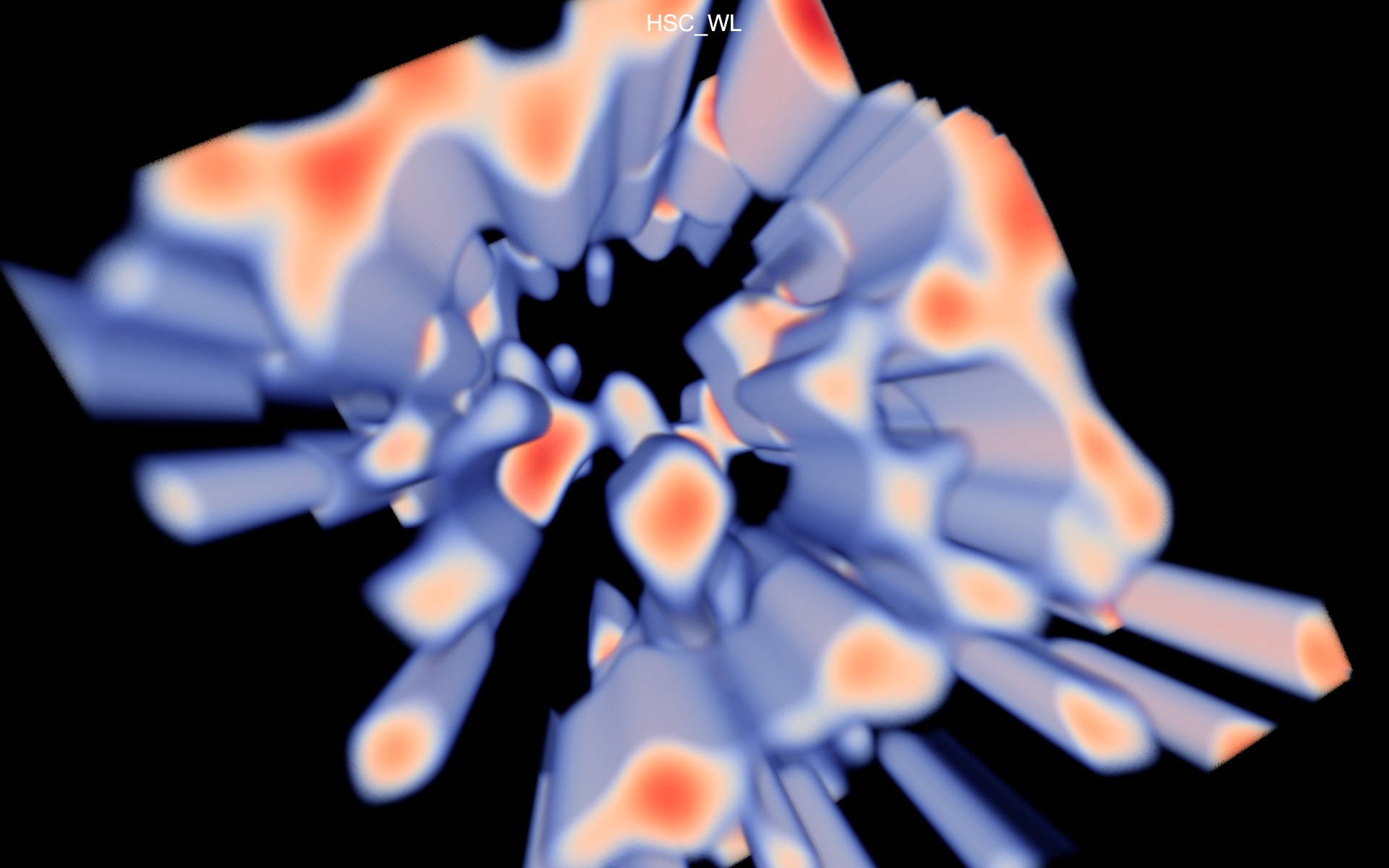

This prototype has been tested with both simulation data (supernova remnants (SNRs), large-scale structure simulations) and observational data (galaxies in HI, stellar outflows in HI, the dark matter distribution in the nearby universe).

(PointData)

This prototype is still largely unpolished and unfinished. It could be revisited and made into a more versatile and functional app.

Sketchfab

Techniques

Visualization methods

- For volume rendering, we perform ray casting on the GPU in real time. This is necessary in VR, where the user controls the viewpoint. The data cube is loaded into memory as a 3D texture after a conversion step from its binary format. A demo of the implementation, using the Unity engine, is available on GitHub. Implementing volume rendering in custom code gives us complete freedom over the transfer function, which maps the data value(s) to color and opacity; the samples along each ray are then composited into the color of a screen pixel.

- For isocontouring (showing surfaces of constant value), we use meshes pre-generated with scientific software (such as scikit-image or VisIt) and loaded as OBJ files. Their appearance is then defined in Unity through the choice of materials (color, opacity) and lighting.

- (Experimental) For point clouds, simple objects are positioned in 3D space according to some attributes, while their shapes and colors can be set from other attributes. The list of points is parsed from a CSV text file.
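The ray-casting and transfer-function steps in the volume-rendering item above can be illustrated with a minimal CPU sketch of front-to-back compositing along a single ray. This is not our Unity code: a GPU implementation would typically run this loop once per pixel in a shader and sample the 3D texture with trilinear filtering. Here the nested-list volume layout, nearest-neighbour sampling, the hypothetical `grayscale_ramp` transfer function and the early-termination threshold are all assumptions for illustration, and opacity correction for the step size is omitted.

```python
import math
from typing import Callable, List, Sequence, Tuple

RGBA = Tuple[float, float, float, float]
Volume = List[List[List[float]]]  # indexed as volume[z][y][x], values normalized to [0, 1]


def grayscale_ramp(value: float) -> RGBA:
    """Hypothetical transfer function: brightness and opacity both grow with the data value."""
    v = min(max(value, 0.0), 1.0)
    return (v, v, v, 0.5 * v)


def sample_nearest(volume: Volume, x: float, y: float, z: float) -> float:
    """Nearest-neighbour lookup in voxel coordinates; points outside the cube are empty (0)."""
    i, j, k = (math.floor(c + 0.5) for c in (x, y, z))
    if 0 <= k < len(volume) and 0 <= j < len(volume[0]) and 0 <= i < len(volume[0][0]):
        return volume[k][j][i]
    return 0.0


def cast_ray(
    volume: Volume,
    origin: Sequence[float],
    direction: Sequence[float],
    n_steps: int,
    step: float = 1.0,
    transfer: Callable[[float], RGBA] = grayscale_ramp,
    opaque_threshold: float = 0.99,
) -> RGBA:
    """March along one ray and composite the samples front to back into one RGBA pixel."""
    r = g = b = a = 0.0
    for n in range(n_steps):
        t = n * step
        x, y, z = (o + t * d for o, d in zip(origin, direction))
        sr, sg, sb, sa = transfer(sample_nearest(volume, x, y, z))
        # Front-to-back "over" operator: weight each sample by the transparency left so far.
        weight = (1.0 - a) * sa
        r += weight * sr
        g += weight * sg
        b += weight * sb
        a += weight
        # Early ray termination: samples behind an almost opaque pixel barely contribute.
        if a >= opaque_threshold:
            break
    return (r, g, b, a)
```

For a 2x2x2 cube filled with 1.0, `cast_ray(cube, (0, 0, 0), (1, 0, 0), n_steps=3)` returns `(0.75, 0.75, 0.75, 0.75)`: the two samples inside the cube each have opacity 0.5, and the third falls outside and contributes nothing. Passing a different `transfer` callable changes the rendering without touching the ray-marching loop, which is the kind of freedom over the transfer function that custom code provides.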
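For the isocontouring item above, the export side of the pipeline can be sketched as a small Wavefront OBJ writer. It assumes the mesh comes from a marching-cubes step such as scikit-image's `skimage.measure.marching_cubes(volume, level)`, which returns vertices, triangular faces (as 0-based vertex indices), normals and values. The `write_obj` helper itself is hypothetical and writes only vertex and face records, leaving materials and lighting to Unity.

```python
import io
from typing import Iterable, Sequence, TextIO


def write_obj(
    out: TextIO,
    vertices: Iterable[Sequence[float]],
    faces: Iterable[Sequence[int]],
) -> None:
    """Write a triangle mesh as Wavefront OBJ: 'v' records first, then 'f' records."""
    for x, y, z in vertices:
        out.write(f"v {x:.6f} {y:.6f} {z:.6f}\n")
    for i, j, k in faces:
        # OBJ indices are 1-based, whereas marching-cubes face indices are 0-based.
        out.write(f"f {i + 1} {j + 1} {k + 1}\n")


if __name__ == "__main__":
    # A single hypothetical triangle, written to an in-memory buffer instead of a file.
    buffer = io.StringIO()
    write_obj(buffer, [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [(0, 1, 2)])
    print(buffer.getvalue(), end="")
```

With scikit-image, one might call `verts, faces, _, _ = marching_cubes(cube, level=0.5)` and then `write_obj(f, verts, faces)`. The vertex coordinates follow the axis order of the input array, so a cube indexed as `[z][y][x]` yields `(z, y, x)` triples that may need reordering before import.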
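The experimental point-cloud loader described above can be sketched with Python's standard `csv` module. Three columns give the position, one numeric column is mapped linearly to a blue-to-red color, and one categorical column selects a primitive shape. The column names (`x`, `y`, `z`, `mass`, `type`), the color ramp and the `SHAPES` table are hypothetical choices for illustration, not the format our prototype actually reads.

```python
import csv
import io
from dataclasses import dataclass
from typing import Dict, List, Tuple

Color = Tuple[float, float, float]

# Hypothetical mapping from a categorical column to primitive shapes.
SHAPES: Dict[str, str] = {"star": "sphere", "galaxy": "cube"}


@dataclass
class Glyph:
    position: Tuple[float, float, float]
    color: Color
    shape: str


def lerp_color(t: float, low: Color = (0.0, 0.0, 1.0), high: Color = (1.0, 0.0, 0.0)) -> Color:
    """Linear blue-to-red ramp; t is clamped to [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    return tuple(lo + t * (hi - lo) for lo, hi in zip(low, high))


def load_point_cloud(text: str, color_column: str = "mass", shape_column: str = "type") -> List[Glyph]:
    """Parse CSV text into glyphs: x/y/z set the position, other columns set color and shape."""
    rows = list(csv.DictReader(io.StringIO(text)))
    if not rows:
        return []
    values = [float(row[color_column]) for row in rows]
    vmin, vmax = min(values), max(values)
    span = (vmax - vmin) or 1.0  # avoid dividing by zero when all values are equal
    return [
        Glyph(
            position=(float(row["x"]), float(row["y"]), float(row["z"])),
            color=lerp_color((value - vmin) / span),
            shape=SHAPES.get(row[shape_column], "sphere"),  # unknown categories become spheres
        )
        for row, value in zip(rows, values)
    ]
```

Keeping the attribute-to-appearance mapping in small, swappable pieces (`lerp_color`, `SHAPES`) is one way to let users choose which attributes drive color and shape without changing the parser.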

So far, we have not explored techniques suited to higher-rank data, such as vector or tensor fields.

User interactions

The data shown are fixed ahead of time, but custom interactions must be implemented in Unity (via C# scripts) so that the user can manipulate the 3D model and/or control its rendering. VR headsets are operated chiefly with a pair of hand-held controllers, which are represented in the same space as the user and the data. A natural UI is therefore important if people are to actually want to use the new system.

- Our demos offer the following interactions with the data cube: one-handed rotation (around a free axis), one-handed scaling (about the model center), two-handed zooming (about a selected point), and free grabbing. Transformations such as scaling or rotation can be controlled either with the touchpad (a familiar 2D gesture) or with hand motions (3D gestures).

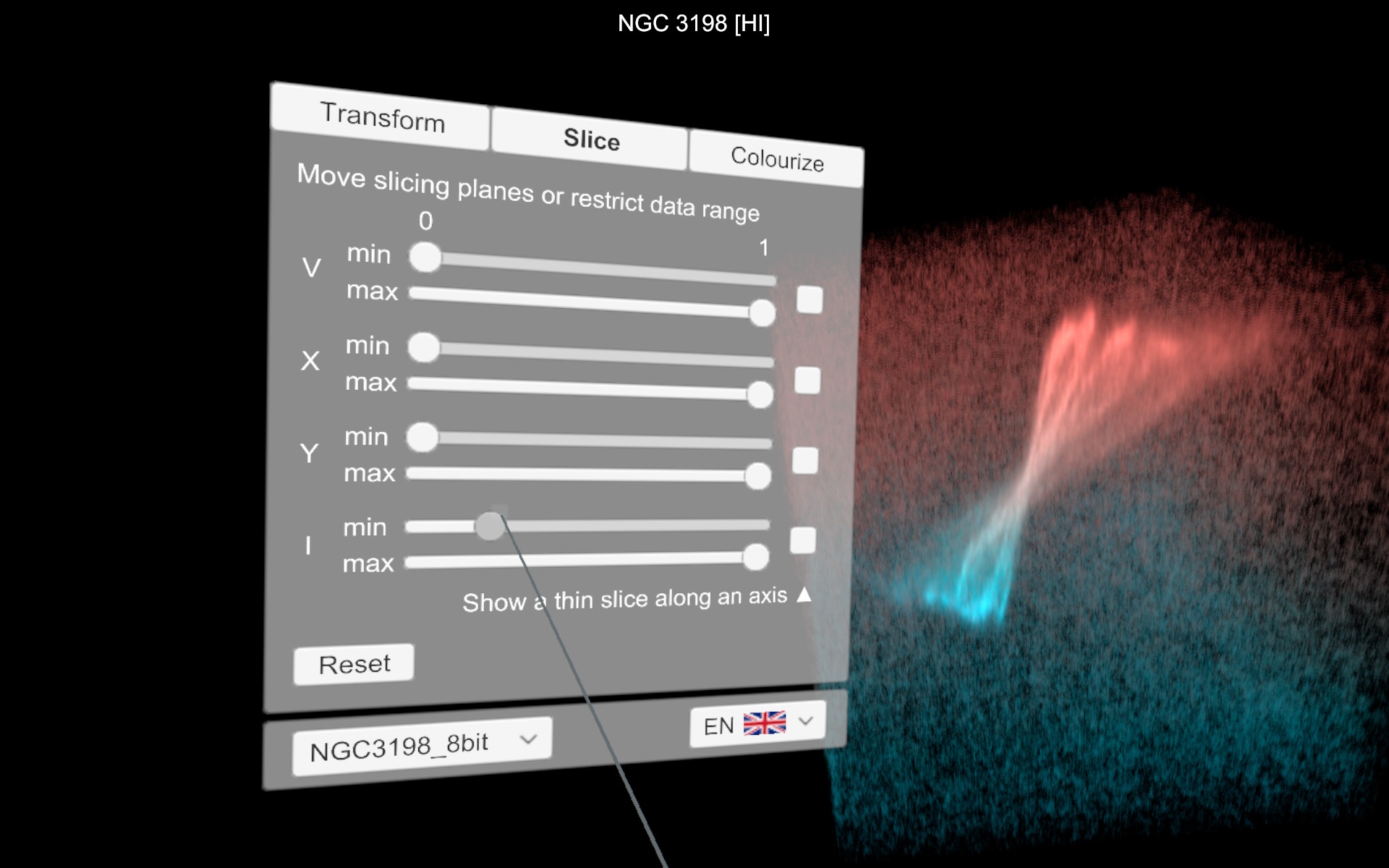

- For more complex interactions, we use a traditional 2D GUI that works both on the desktop screen and in the VR world. The user can toggle the GUI panel on and off; it is attached to the user's hand, following the painter's palette metaphor (also used in Tilt Brush). The user can also interact with the GUI controls by pointing and clicking with a virtual laser pointer.
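The scaling and zooming interactions listed above both reduce to scaling the model about a fixed point: if a pivot p must stay in place while the scale changes by a factor k, the world position x of the model's origin becomes p + k (x - p). A minimal sketch follows, assuming the two-handed zoom factor is the ratio of the current to the previous distance between the two controllers. The `ModelTransform` type and the function names are hypothetical, and rotation is left out. One-handed scaling about the model center would be the same `scale_about_point` call with the center as pivot.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ModelTransform:
    position: Vec3  # world-space position of the model's origin
    scale: float    # uniform scale factor


def scale_about_point(model: ModelTransform, pivot: Vec3, factor: float) -> ModelTransform:
    """Scale the model uniformly by `factor` while keeping `pivot` fixed in world space."""
    new_position = tuple(p + factor * (x - p) for x, p in zip(model.position, pivot))
    return ModelTransform(position=new_position, scale=model.scale * factor)


def two_handed_zoom(
    model: ModelTransform,
    left_before: Vec3,
    right_before: Vec3,
    left_now: Vec3,
    right_now: Vec3,
    pivot: Vec3,
    min_distance: float = 1e-6,
) -> ModelTransform:
    """Zoom by the ratio of the current to the previous distance between the controllers."""
    d_before = math.dist(left_before, right_before)
    if d_before < min_distance:
        return model  # controllers (almost) touching: the ratio would be ill-defined
    return scale_about_point(model, pivot, math.dist(left_now, right_now) / d_before)
```

Because every model point w maps to p + k (w - p), the selected point stays under the user's hands while everything else grows or shrinks around it, which is what makes the gesture feel anchored.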
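Point-and-click with the virtual laser pointer comes down to intersecting the controller's ray with the plane of the GUI panel and converting the hit point into panel coordinates. The sketch below assumes a rectangular panel described by its center, orthonormal `right` and `up` axes, and its size; all names are hypothetical, and in Unity this test could instead be delegated to the engine's own raycasting rather than written by hand.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Sequence[float]


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def sub(a: Vec3, b: Vec3) -> Tuple[float, ...]:
    return tuple(x - y for x, y in zip(a, b))


def cross(a: Vec3, b: Vec3) -> Tuple[float, float, float]:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def laser_hit_panel(
    origin: Vec3, direction: Vec3,
    center: Vec3, right: Vec3, up: Vec3,
    width: float, height: float,
) -> Optional[Tuple[float, float]]:
    """Return panel coordinates (u, v) in [0, 1] where the laser ray hits the panel, else None.

    Assumes `right` and `up` are orthonormal vectors spanning the panel plane.
    """
    normal = cross(right, up)
    denom = dot(direction, normal)
    if abs(denom) < 1e-9:
        return None  # ray parallel to the panel
    t = dot(sub(center, origin), normal) / denom
    if t < 0:
        return None  # panel is behind the controller
    hit = tuple(o + t * d for o, d in zip(origin, direction))
    local = sub(hit, center)
    u, v = dot(local, right), dot(local, up)
    if abs(u) > width / 2 or abs(v) > height / 2:
        return None  # ray misses the panel's rectangle
    return (u / width + 0.5, v / height + 0.5)
```

The function returns `None` when the ray is parallel to the panel, points away from it, or misses it; otherwise the `(u, v)` pair can be fed to the 2D GUI much like a mouse position on the desktop, which is what lets the same panel serve both displays.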

Immersive 3D brings its own set of opportunities and challenges, which we will keep exploring with the help of computer scientists. Our current prototypes are suited to the data exploration phase. Future lines of investigation include letting users easily perform quantitative analysis on the data.